Optics

Optics is the branch of physics which studies the behavior and properties of light, including its interactions with matter and the construction of instruments that use or detect it.[1] Optics usually describes the behavior of visible, ultraviolet, and infrared light. Because light is an electromagnetic wave, other forms of electromagnetic radiation such as X-rays, microwaves, and radio waves exhibit similar properties.[1]

Most optical phenomena can be accounted for using the classical electromagnetic description of light. Complete electromagnetic descriptions of light are, however, often difficult to apply in practice. Practical optics is usually done using simplified models. The most common of these, geometric optics, treats light as a collection of rays that travel in straight lines and bend when they pass through or reflect from surfaces. Physical optics is a more comprehensive model of light, which includes wave effects such as diffraction and interference that cannot be accounted for in geometric optics. Historically, the ray-based model of light was developed first, followed by the wave model of light. Progress in electromagnetic theory in the 19th century led to the discovery that light waves were in fact electromagnetic radiation.

Some phenomena depend on the fact that light has both wave-like and particle-like properties. Explanation of these effects requires quantum mechanics. When considering light's particle-like properties, the light is modeled as a collection of particles called "photons". Quantum optics deals with the application of quantum mechanics to optical systems.

Optical science is relevant to and studied in many related disciplines including astronomy, various engineering fields, photography, and medicine (particularly ophthalmology and optometry). Practical applications of optics are found in a variety of technologies and everyday objects, including mirrors, lenses, telescopes, microscopes, lasers, and fiber optics.


History

Optics began with the development of lenses by the ancient Egyptians and Mesopotamians. The earliest known lenses were made from polished crystal, often quartz, and have been dated as early as 700 BC for Assyrian lenses such as the Layard/Nimrud lens.[2] The ancient Romans and Greeks filled glass spheres with water to make lenses. These practical developments were followed by the development of theories of light and vision by ancient Greek and Indian philosophers, and the development of geometrical optics in the Greco-Roman world. The word optics comes from the ancient Greek word ὀπτική, meaning appearance or look.[3] Plato first articulated emission theory, the idea that visual perception is accomplished by rays emitted by the eyes. He also commented on the parity reversal of mirrors in Timaeus.[4] Some hundred years later, Euclid wrote a treatise entitled Optics wherein he described the mathematical rules of perspective and described the effects of refraction qualitatively.[5] Ptolemy, in his treatise Optics, summarized much of Euclid and went on to describe a way to measure the angle of refraction, though he failed to notice the empirical relationship between it and the angle of incidence.[6]

During the Middle Ages, Greek ideas about optics were resurrected and extended by writers in the Muslim world. One of the earliest of these was Al-Kindi (c. 801–73). In 984, the Persian mathematician Ibn Sahl wrote the treatise "On burning mirrors and lenses", correctly describing a law of refraction equivalent to Snell's law.[7] He used this law to compute optimum shapes for lenses and curved mirrors. In the early 11th century, Alhazen (Ibn al-Haytham) wrote his Book of Optics, which documented the then-current understanding of vision.[8][9][10]

In the 13th century, Roger Bacon used parts of glass spheres as magnifying glasses, and discovered that light reflects from objects rather than being released from them. In Italy, around 1284, Salvino D'Armate invented the first wearable eyeglasses.[11]

The earliest known telescopes were refracting telescopes, a type which relies entirely on lenses for magnification. The first rudimentary telescopes were developed independently in the 1570s and 1580s by Leonard Digges,[12] and Giambattista della Porta.[13] Their development in the Netherlands in 1608 was by three individuals: Hans Lippershey and Zacharias Janssen, who were spectacle makers in Middelburg, and Jacob Metius of Alkmaar. In Italy, Galileo greatly improved upon these designs the following year. In 1668, Isaac Newton constructed the first practical reflecting telescope, which bears his name, the Newtonian reflector.[14]

The first microscope was made around 1595, also in Middelburg.[15] Three different eyeglass makers have been given credit for the invention: Lippershey, Janssen, and Janssen's father, Hans. The coining of the name "microscope" has been credited to Giovanni Faber, who gave that name to Galileo's compound microscope in 1625.[16]

Optical theory progressed in the mid-17th century with treatises written by philosopher René Descartes, which explained a variety of optical phenomena including reflection and refraction by assuming that light was emitted by objects which produced it.[17] This differed substantively from the ancient Greek emission theory. In the late 1660s and early 1670s, Newton expanded Descartes' ideas into a corpuscle theory of light, famously showing that white light, instead of being a unique color, was really a composite of different colors that can be separated into a spectrum with a prism. In 1690, Christiaan Huygens proposed a wave theory for light based on suggestions that had been made by Robert Hooke in 1664. Hooke himself publicly criticized Newton's theories of light and the feud between the two lasted until Hooke's death. In 1704, Newton published Opticks and, at the time, partly because of his success in other areas of physics, he was generally considered to be the victor in the debate over the nature of light.[17]

Newtonian optics was generally accepted until the early 19th century when Thomas Young and Augustin-Jean Fresnel conducted experiments on the interference of light that firmly established light's wave nature. Young's famous double slit experiment showed that light followed the law of superposition, which is a wave-like property not predicted by Newton's corpuscle theory. This work led to a theory of diffraction for light and opened an entire area of study in physical optics.[18] Wave optics was successfully unified with electromagnetic theory by James Clerk Maxwell in the 1860s.[19]

The next development in optical theory came in 1900 when Max Planck correctly modeled blackbody radiation by assuming that the exchange of energy between light and matter only occurred in discrete amounts he called quanta.[20] In 1905, Albert Einstein published the theory of the photoelectric effect that firmly established the quantization of light itself.[21][22] In 1913, Niels Bohr showed that atoms could only emit discrete amounts of energy, thus explaining the discrete lines seen in emission and absorption spectra.[23] The understanding of the interaction between light and matter, which followed from these developments, not only formed the basis of quantum optics but also was crucial for the development of quantum mechanics as a whole. The ultimate culmination was the theory of quantum electrodynamics, which explains all optics and electromagnetic processes in general as being the result of the exchange of real and virtual photons.[24]

Quantum optics gained practical importance with the invention of the maser in 1953 and the laser in 1960.[25] Following the work of Paul Dirac in quantum field theory, George Sudarshan, Roy J. Glauber, and Leonard Mandel applied quantum theory to the electromagnetic field in the 1950s and 1960s to gain a more detailed understanding of photodetection and the statistics of light.

Classical optics

In pre-quantum-mechanical optics, light is an electromagnetic wave composed of oscillating electric and magnetic fields. These fields continually generate each other, as the wave propagates through space and oscillates in time.[26]

The frequency of a light wave is determined by the period of the oscillations. The frequency does not normally change as the wave travels through different materials ("media"), but the speed of the wave depends on the medium. The speed, frequency, and wavelength of a wave are related by the formula

$$v = \lambda f$$

where $v$ is the speed, $\lambda$ is the wavelength and $f$ is the frequency. Because the frequency is fixed, a change in the wave's speed produces a change in its wavelength.[27]

The speed of light in a medium is typically characterized by the index of refraction, $n$, which is the ratio of the speed of light in vacuum, $c$, to the speed in the medium:

$$n = \frac{c}{v}$$

The speed of light in vacuum is a constant, which is exactly 299,792,458 metres per second.[28] Thus, a light ray with a wavelength of $\lambda$ in a vacuum will have a wavelength of $\lambda/n$ in a material with index of refraction $n$.
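The relations between speed, wavelength, frequency, and refractive index can be checked numerically. The following is a minimal sketch; the function names and the refractive index used for water (1.33) are illustrative assumptions rather than values taken from the text.

```python
C_VACUUM = 299_792_458.0  # speed of light in vacuum, m/s (exact by definition)

def speed_in_medium(n):
    """Speed of light in a medium of refractive index n, from n = c / v."""
    return C_VACUUM / n

def wavelength_in_medium(vacuum_wavelength, n):
    """Wavelength inside the medium, lambda / n (the frequency is unchanged)."""
    return vacuum_wavelength / n

def frequency(speed, wavelength):
    """Frequency from v = lambda * f."""
    return speed / wavelength

n_water = 1.33   # assumed illustrative index for water
lam0 = 589e-9    # assumed vacuum wavelength in metres (yellow light)

v = speed_in_medium(n_water)
lam = wavelength_in_medium(lam0, n_water)

# The frequency computed in vacuum and in the medium should agree.
f_vacuum = frequency(C_VACUUM, lam0)
f_medium = frequency(v, lam)
print(v, lam, f_vacuum, f_medium)
```

Both the speed and the wavelength shrink by the same factor $n$, so their ratio, the frequency, is the same in the medium as in vacuum.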

The amplitude of the light wave is related to the intensity of the light, which is related to the energy stored in the wave's electric and magnetic fields.

Traditional optics is divided into two main branches: geometrical optics and physical optics.

Geometrical optics

Geometrical optics, or ray optics, describes light propagation in terms of "rays". The "ray" in geometric optics is an abstraction, or "instrument", that can be used to predict the path of light. A light ray is a ray that is perpendicular to the light's wavefronts (and therefore collinear with the wave vector). Light rays bend at the interface between two dissimilar media and may be curved in a medium in which the refractive index changes. Geometrical optics provides rules for propagating these rays through an optical system, which indicates how the actual wavefront will propagate. This is a significant simplification of optics that fails to account for optical effects such as diffraction and polarization. It is a good approximation, however, when the wavelength is very small compared with the size of structures with which the light interacts. Geometric optics can be used to describe the geometrical aspects of imaging, including optical aberrations.

A slightly more rigorous definition of a light ray follows from Fermat's principle which states that the path taken between two points by a ray of light is the path that can be traversed in the least time.[29]

Approximations

Geometrical optics is often simplified by making the paraxial approximation, or "small angle approximation." The mathematical behavior then becomes linear, allowing optical components and systems to be described by simple matrices. This leads to the techniques of Gaussian optics and paraxial ray tracing, which are used to find basic properties of optical systems, such as approximate image and object positions and magnifications.[30]
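As an illustration of describing paraxial systems with simple matrices, the sketch below uses the common ray-transfer (ABCD) convention, in which a ray is a pair (height, angle). The convention, the helper names, and the sample focal length are assumptions chosen for the example, not details given in the text.

```python
def matmul(a, b):
    """Product of two 2x2 matrices stored as nested lists."""
    return [[sum(a[i][k] * b[k][j] for k in range(2)) for j in range(2)]
            for i in range(2)]

def free_space(d):
    """Propagation over a distance d: the height changes by d * angle."""
    return [[1.0, d], [0.0, 1.0]]

def thin_lens(f):
    """Thin lens of focal length f: the angle changes by -height / f."""
    return [[1.0, 0.0], [-1.0 / f, 1.0]]

def propagate(m, ray):
    """Apply a ray-transfer matrix to a ray (height, angle in radians)."""
    y, theta = ray
    return (m[0][0] * y + m[0][1] * theta, m[1][0] * y + m[1][1] * theta)

f = 0.1  # assumed focal length in metres
# The lens acts first, then the ray travels one focal length.
system = matmul(free_space(f), thin_lens(f))

# A ray parallel to the axis at height 5 mm should cross the axis at the focus.
ray_out = propagate(system, (0.005, 0.0))
print(ray_out)
```

Because matrices compose by multiplication, a longer system is modelled by multiplying the element matrices in reverse order of traversal.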

Reflections

Reflections can be divided into two types: specular reflection and diffuse reflection. Specular reflection describes glossy surfaces such as mirrors, which reflect light in a simple, predictable way. This allows for production of reflected images that can be associated with an actual (real) or extrapolated (virtual) location in space. Diffuse reflection describes matte surfaces, such as paper or rock. The reflections from these surfaces can only be described statistically, with the exact distribution of the reflected light depending on the microscopic structure of the surface. Many diffuse reflectors are described or can be approximated by Lambert's cosine law, which describes surfaces that have equal luminance when viewed from any angle.

In specular reflection, the direction of the reflected ray is determined by the angle the incident ray makes with the surface normal, a line perpendicular to the surface at the point where the ray hits. The incident and reflected rays lie in a single plane, and the angle between the reflected ray and the surface normal is the same as that between the incident ray and the normal.[31] This is known as the Law of Reflection.
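In vector form, the law of reflection is often written as r = d - 2 (d · n) n, where d is the incident direction and n the unit surface normal. The sketch below assumes that formulation; the function names are illustrative.

```python
import math

def reflect(d, normal):
    """Reflect direction d about a surface with the given normal vector."""
    length = math.sqrt(sum(c * c for c in normal))
    n_hat = [c / length for c in normal]          # normalise the normal
    dot = sum(a * b for a, b in zip(d, n_hat))    # component of d along the normal
    return [a - 2.0 * dot * b for a, b in zip(d, n_hat)]

def angle_to_normal_deg(v, normal):
    """Angle between the line of v and the normal, in degrees (0 to 90)."""
    dot = abs(sum(a * b for a, b in zip(v, normal)))
    norm_v = math.sqrt(sum(a * a for a in v))
    norm_n = math.sqrt(sum(b * b for b in normal))
    return math.degrees(math.acos(dot / (norm_v * norm_n)))

incident = [1.0, -1.0, 0.0]   # travelling down and to the right
normal = [0.0, 1.0, 0.0]      # mirror lying in the x-z plane
reflected = reflect(incident, normal)
print(reflected)
print(angle_to_normal_deg(incident, normal), angle_to_normal_deg(reflected, normal))
```

The reflected ray stays in the plane spanned by the incident ray and the normal, and the two angles to the normal are equal.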

For flat mirrors, the law of reflection implies that images of objects are upright and the same distance behind the mirror as the objects are in front of the mirror. The image size is the same as the object size. (The magnification of a flat mirror is unity.) The law also implies that mirror images are parity inverted, which we perceive as a left-right inversion. Images formed from reflection in two (or any even number of) mirrors are not parity inverted. Corner reflectors[31] retroreflect light, producing reflected rays that travel back in the direction from which the incident rays came.

Mirrors with curved surfaces can be modeled by ray-tracing and using the law of reflection at each point on the surface. For mirrors with parabolic surfaces, parallel rays incident on the mirror produce reflected rays that converge at a common focus. Other curved surfaces may also focus light, but with aberrations due to the diverging shape causing the focus to be smeared out in space. In particular, spherical mirrors exhibit spherical aberration. Curved mirrors can form images with magnification greater than or less than one, and the magnification can be negative, indicating that the image is inverted. An upright image formed by reflection in a mirror is always virtual, while an inverted image is real and can be projected onto a screen.[31]

Refractions

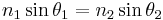

Refraction occurs when light travels through an area of space that has a changing index of refraction; this principle allows for lenses and the focusing of light. The simplest case of refraction occurs when there is an interface between a uniform medium with index of refraction $n_1$ and another medium with index of refraction $n_2$. In such situations, Snell's Law describes the resulting deflection of the light ray:

$$n_1 \sin\theta_1 = n_2 \sin\theta_2$$

where $\theta_1$ and $\theta_2$ are the angles between the normal (to the interface) and the incident and refracted waves, respectively. This phenomenon is also associated with a changing speed of light as seen from the definition of index of refraction provided above which implies:

$$v_1 \sin\theta_2 = v_2 \sin\theta_1$$

where $v_1$ and $v_2$ are the wave velocities through the respective media.[31]

One consequence of Snell's Law is that for light rays traveling from a material with a high index of refraction to a material with a low index of refraction, it is possible for the interaction with the interface to result in zero transmission. This phenomenon is called total internal reflection and allows for fiber optics technology. As light signals travel down a fiber optic cable, they undergo total internal reflection, so that essentially no light is lost over the length of the cable. It is also possible to produce polarized light rays using a combination of reflection and refraction: when a refracted ray and the reflected ray form a right angle, the reflected ray has the property of "plane polarization". The angle of incidence required for such a scenario is known as Brewster's angle.[31]
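Snell's Law, the onset of total internal reflection, and Brewster's angle can be sketched in a few lines. The function names and the indices used (1.0 for air, 1.5 for glass) are illustrative assumptions.

```python
import math

def refraction_angle(n1, n2, theta1):
    """Refracted angle in radians from n1 sin(theta1) = n2 sin(theta2).

    Returns None when no refracted ray exists (total internal reflection).
    """
    s = n1 * math.sin(theta1) / n2
    if abs(s) > 1.0:
        return None
    return math.asin(s)

def critical_angle(n1, n2):
    """Smallest incidence angle giving total internal reflection (needs n1 > n2)."""
    if n1 <= n2:
        raise ValueError("total internal reflection requires n1 > n2")
    return math.asin(n2 / n1)

def brewster_angle(n1, n2):
    """Incidence angle at which reflected and refracted rays are perpendicular."""
    return math.atan2(n2, n1)

n_air, n_glass = 1.0, 1.5   # assumed illustrative indices

theta_c = critical_angle(n_glass, n_air)
theta_b = brewster_angle(n_air, n_glass)
theta_t = refraction_angle(n_air, n_glass, theta_b)

print(math.degrees(theta_c))            # critical angle, glass to air
print(math.degrees(theta_b + theta_t))  # reflected and refracted rays at a right angle
print(refraction_angle(n_glass, n_air, math.radians(45.0)))  # beyond the critical angle
```

At Brewster's angle the incidence angle and the refraction angle sum to 90°, which is the right-angle condition described above.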

Snell's Law can be used to predict the deflection of light rays as they pass through "linear media" as long as the indexes of refraction and the geometry of the media are known. For example, the propagation of light through a prism results in the light ray being deflected depending on the shape and orientation of the prism. Additionally, since different frequencies of light have slightly different indexes of refraction in most materials, refraction can be used to produce dispersion spectra that appear as rainbows. The discovery of this phenomenon when passing light through a prism is famously attributed to Isaac Newton.[31]

Some media have an index of refraction which varies gradually with position and, thus, light rays curve through the medium rather than travel in straight lines. This effect is what is responsible for mirages seen on hot days where the changing index of refraction of the air causes the light rays to bend creating the appearance of specular reflections in the distance (as if on the surface of a pool of water). Material that has a varying index of refraction is called a gradient-index (GRIN) material and has many useful properties used in modern optical scanning technologies including photocopiers and scanners. The phenomenon is studied in the field of gradient-index optics.[32]

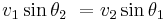

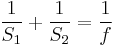

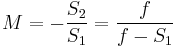

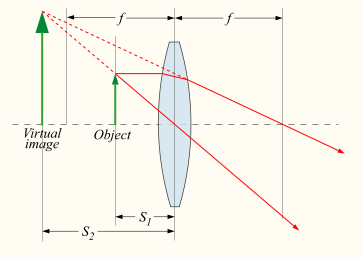

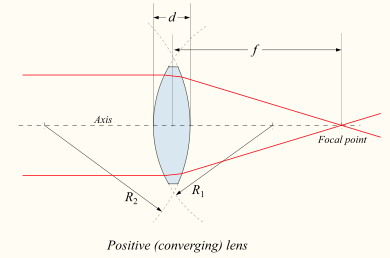

A device which produces converging or diverging light rays due to refraction is known as a lens. Thin lenses produce focal points on either side that can be modeled using the lensmaker's equation.[33] In general, two types of lenses exist: convex lenses, which cause parallel light rays to converge, and concave lenses, which cause parallel light rays to diverge. The detailed prediction of how images are produced by these lenses can be made using ray-tracing similar to curved mirrors. Similarly to curved mirrors, thin lenses follow a simple equation that determines the location of the images given a particular focal length ($f$) and object distance ($S_1$):

$$\frac{1}{S_1} + \frac{1}{S_2} = \frac{1}{f}$$

where $S_2$ is the distance associated with the image and is considered by convention to be negative if on the same side of the lens as the object and positive if on the opposite side of the lens.[33] The focal length $f$ is considered negative for concave lenses.

Incoming parallel rays are focused by a convex lens into an inverted real image one focal length from the lens, on the far side of the lens. Rays from an object at finite distance are focused further from the lens than the focal distance; the closer the object is to the lens, the further the image is from the lens. With concave lenses, incoming parallel rays diverge after going through the lens, in such a way that they seem to have originated at an upright virtual image one focal length from the lens, on the same side of the lens that the parallel rays are approaching on. Rays from an object at finite distance are associated with a virtual image that is closer to the lens than the focal length, and on the same side of the lens as the object. The closer the object is to the lens, the closer the virtual image is to the lens.

Likewise, the magnification of a lens is given by

$$M = -\frac{S_2}{S_1} = \frac{f}{f - S_1}$$

where $S_1$ and $S_2$ are the object and image distances and the negative sign is given, by convention, to indicate an upright image for positive values and an inverted image for negative values. Similar to mirrors, upright images produced by single lenses are virtual while inverted images are real.[31]
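A short worked example may help with the sign conventions. The sketch below assumes the thin lens relation 1/S1 + 1/S2 = 1/f and the magnification M = -S2/S1 = f/(f - S1); the function names and the sample distances are illustrative.

```python
def image_distance(f, s1):
    """Image distance S2 from 1/S1 + 1/S2 = 1/f (positive: far side of the lens)."""
    if s1 == f:
        raise ValueError("object at the focal point: the image is at infinity")
    return 1.0 / (1.0 / f - 1.0 / s1)

def magnification(f, s1):
    """Magnification M = f / (f - S1); a negative value means an inverted image."""
    return f / (f - s1)

# Convex lens, f = 0.10 m, object 0.30 m away: real, inverted image.
s2_convex = image_distance(0.10, 0.30)
m_convex = magnification(0.10, 0.30)

# Concave lens, f = -0.10 m, same object: virtual, upright image.
s2_concave = image_distance(-0.10, 0.30)
m_concave = magnification(-0.10, 0.30)

print(s2_convex, m_convex)
print(s2_concave, m_concave)
```

In the convex case the image distance is positive and the magnification negative (real and inverted); in the concave case the image distance is negative and the magnification positive (virtual and upright).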

Lenses suffer from aberrations that distort images and focal points. These are due both to geometrical imperfections and to the changing index of refraction for different wavelengths of light (chromatic aberration).[31]

Physical optics

Physical optics or wave optics builds on Huygens's principle, which states that every point on an advancing wavefront is the center of a new disturbance. When combined with the superposition principle, this explains how optical phenomena are manifested when there are multiple sources or obstructions that are spaced at distances similar to the wavelength of the light.[34]

Complex models based on physical optics can account for the propagation of any wavefront through an optical system, including predicting the wavelength, amplitude, and phase of the wave.[34] Additionally, all of the results from geometrical optics can be recovered using the techniques of Fourier optics which apply many of the same mathematical and analytical techniques used in acoustic engineering and signal processing.

Using numerical modeling on a computer, optical scientists can simulate the propagation of light and account for most diffraction, interference, and polarization effects. Such simulations typically still rely on approximations, however, so this is not a full electromagnetic wave theory model of the propagation of light. Such a full model is computationally demanding and is normally only used to solve small-scale problems that require extraordinary accuracy.[35]

Gaussian beam propagation is a simple paraxial physical optics model for the propagation of coherent radiation such as laser beams. This technique partially accounts for diffraction, allowing accurate calculations of the rate at which a laser beam expands with distance, and the minimum size to which the beam can be focused. Gaussian beam propagation thus bridges the gap between geometric and physical optics.[36]
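The expansion of a laser beam with distance can be sketched with the standard Gaussian beam relations, which are not spelled out in the text and are assumed here: the Rayleigh range z_R = π w0² / λ and the beam radius w(z) = w0 √(1 + (z / z_R)²). The waist size and wavelength below are illustrative.

```python
import math

def rayleigh_range(w0, wavelength):
    """Distance over which the beam radius grows by a factor of sqrt(2)."""
    return math.pi * w0 ** 2 / wavelength

def beam_radius(z, w0, wavelength):
    """Beam radius at distance z from the waist."""
    z_r = rayleigh_range(w0, wavelength)
    return w0 * math.sqrt(1.0 + (z / z_r) ** 2)

def far_field_half_angle(w0, wavelength):
    """Asymptotic divergence half-angle in radians."""
    return wavelength / (math.pi * w0)

w0 = 1e-3            # assumed waist radius: 1 mm
wavelength = 633e-9  # assumed wavelength: 633 nm

z_r = rayleigh_range(w0, wavelength)
print(z_r)                                   # a few metres for these values
print(beam_radius(z_r, w0, wavelength))      # sqrt(2) times the waist radius
print(far_field_half_angle(w0, wavelength))  # smaller waist means larger divergence
```

The inverse relation between waist size and divergence is the diffraction effect that the model partially accounts for.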

Superposition and interference

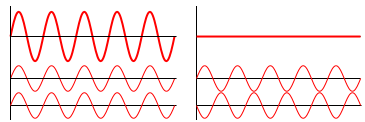

In the absence of nonlinear effects, the superposition principle can be used to predict the shape of interacting waveforms through the simple addition of the disturbances.[37] This interaction of waves to produce a resulting pattern is generally termed "interference" and can result in a variety of outcomes. If two waves of the same wavelength and frequency are in phase, both the wave crests and wave troughs align. This results in constructive interference and an increase in the amplitude of the wave, which for light is associated with a brightening of the waveform in that location. Alternatively, if the two waves of the same wavelength and frequency are out of phase, then the wave crests will align with wave troughs and vice-versa. This results in destructive interference and a decrease in the amplitude of the wave, which for light is associated with a dimming of the waveform at that location. See below for an illustration of this effect.[37]

[Figure: wave 1, wave 2, and the combined waveform, shown for two cases: two waves in phase, and two waves 180° out of phase.]
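Constructive and destructive interference can also be reproduced by adding two sampled sine waves point by point. This is a minimal sketch with unit amplitudes chosen for illustration; the function names are hypothetical.

```python
import math

def superpose(amp1, amp2, phase_diff, samples=1000):
    """Sample one period of two equal-frequency sine waves and add them."""
    combined = []
    for k in range(samples):
        x = 2.0 * math.pi * k / samples
        combined.append(amp1 * math.sin(x) + amp2 * math.sin(x + phase_diff))
    return combined

def peak(values):
    """Largest absolute value of the combined waveform."""
    return max(abs(v) for v in values)

in_phase = peak(superpose(1.0, 1.0, 0.0))          # crests align with crests
out_of_phase = peak(superpose(1.0, 1.0, math.pi))  # crests align with troughs

print(in_phase, out_of_phase)
```

With equal amplitudes the in-phase sum has twice the amplitude of either wave, while the sum of waves 180° out of phase cancels to within rounding error.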

Since Huygens's principle states that every point of a wavefront is associated with the production of a new disturbance, it is possible for a wavefront to interfere with itself constructively or destructively at different locations producing bright and dark fringes in regular and predictable patterns.[37] Interferometry is the science of measuring these patterns, usually as a means of making precise determinations of distances or angular resolutions.[38] The Michelson interferometer was a famous instrument which used interference effects to accurately measure the speed of light.[39]

The appearance of thin films and coatings is directly affected by interference effects. Antireflective coatings use destructive interference to reduce the reflectivity of the surfaces they coat, and can be used to minimize glare and unwanted reflections. The simplest case is a single layer with thickness one-fourth the wavelength of incident light. The reflected wave from the top of the film and the reflected wave from the film/material interface are then exactly 180° out of phase, causing destructive interference. The waves are only exactly out of phase for one wavelength, which would typically be chosen to be near the center of the visible spectrum, around 550 nm. More complex designs using multiple layers can achieve low reflectivity over a broad band, or extremely low reflectivity at a single wavelength.
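As a rough numerical illustration of the single-layer case, the sketch below takes the quarter wavelength to be measured inside the film (vacuum wavelength divided by the film index) and assumes both reflections undergo the same phase shift, as when the film index lies between those of air and the substrate. The film index 1.38 is an assumed, illustrative value.

```python
import math

def quarter_wave_thickness(vacuum_wavelength, n_film):
    """Physical film thickness equal to one quarter of the wavelength in the film."""
    return vacuum_wavelength / (4.0 * n_film)

def round_trip_phase(thickness, vacuum_wavelength, n_film):
    """Phase difference (radians) between the two reflected waves at normal incidence."""
    optical_path = 2.0 * n_film * thickness   # down and back through the film
    return 2.0 * math.pi * optical_path / vacuum_wavelength

design_wavelength = 550e-9  # centre of the visible spectrum, as in the text
n_film = 1.38               # assumed film index

t = quarter_wave_thickness(design_wavelength, n_film)
print(t)                                                          # roughly 100 nm
print(math.degrees(round_trip_phase(t, design_wavelength, n_film)))  # 180 at the design wavelength
print(math.degrees(round_trip_phase(t, 450e-9, n_film)))          # no longer 180 for blue light
```

The last line shows why a single layer cancels reflections exactly at only one wavelength.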

Constructive interference in thin films can create strong reflection of light in a range of wavelengths, which can be narrow or broad depending on the design of the coating. These films are used to make dielectric mirrors, interference filters, heat reflectors, and filters for color separation in color television cameras. This interference effect is also what causes the colorful rainbow patterns seen in oil slicks.[37]

Diffraction and optical resolution

[Figure caption: The bright fringes occur along lines where black lines intersect with black lines and white lines intersect with white lines. These fringes are separated by angle $\theta$ and are numbered as order $m$.]

Diffraction is the process by which light interference is most commonly observed. The effect was first described in 1665 by Francesco Maria Grimaldi, who also coined the term from the Latin diffringere, 'to break into pieces'.[40][41] Later that century, Robert Hooke and Isaac Newton also described phenomena now known to be diffraction in Newton's rings[42] while James Gregory recorded his observations of diffraction patterns from bird feathers.[43]

The first physical optics model of diffraction that relied on Huygens' Principle was developed in 1803 by Thomas Young in his accounts of the interference patterns of two closely spaced slits. Young showed that his results could only be explained if the two slits acted as two unique sources of waves rather than corpuscles.[44] In 1815 and 1818, Augustin-Jean Fresnel firmly established the mathematics of how wave interference can account for diffraction.[33]

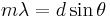

The simplest physical models of diffraction use equations that describe the angular separation of light and dark fringes due to light of a particular wavelength ($\lambda$). In general, the equation takes the form

$$m \lambda = d \sin\theta$$

where $d$ is the separation between two wavefront sources (in the case of Young's experiments, it was two slits), $\theta$ is the angular separation between the central fringe and the $m$th order fringe, where the central maximum is $m = 0$.[45]
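The fringe relation m λ = d sin θ can be evaluated directly to list the bright-fringe directions. In this sketch the slit separation (10 µm) and wavelength (633 nm) are assumed values, and the function name is illustrative.

```python
import math

def bright_fringe_angles(d, wavelength):
    """Return (order m, angle in degrees) for every order with m * wavelength <= d."""
    fringes = []
    m = 0
    while m * wavelength / d <= 1.0:
        theta = math.asin(m * wavelength / d)   # from m * lambda = d * sin(theta)
        fringes.append((m, math.degrees(theta)))
        m += 1
    return fringes

fringes = bright_fringe_angles(d=10e-6, wavelength=633e-9)
for order, angle in fringes[:4]:
    print(order, round(angle, 2))
print(len(fringes))  # number of orders on one side, including the central maximum
```

Orders for which m λ exceeds d have no solution for θ, so only a finite number of fringes appear on each side of the central maximum.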

This equation is modified slightly to take into account a variety of situations such as diffraction through a single gap, diffraction through multiple slits, or diffraction through a diffraction grating that contains a large number of slits at equal spacing.[45] More complicated models of diffraction require working with the mathematics of Fresnel or Fraunhofer diffraction.[34]

X-ray diffraction makes use of the fact that atoms in a crystal have regular spacing at distances that are on the order of one angstrom. To see diffraction patterns, x-rays with similar wavelengths to that spacing are passed through the crystal. Since crystals are three-dimensional objects rather than two-dimensional gratings, the associated diffraction pattern varies in two directions according to Bragg reflection, with the associated bright spots occurring in unique patterns and $d$ being twice the spacing between atoms.[45]

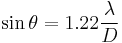

Diffraction effects limit the ability of an optical detector to optically resolve separate light sources. In general, light that is passing through an aperture will experience diffraction and the best images that can be created (as described in diffraction-limited optics) appear as a central spot with surrounding bright rings, separated by dark nulls; this pattern is known as an Airy pattern, and the central bright lobe as an Airy disk.[33] The size of such a disk is given by

$$\sin\theta = 1.22 \frac{\lambda}{D}$$

where θ is the angular resolution, λ is the wavelength of the light, and D is the diameter of the lens aperture. If the angular separation of the two points is significantly less than the Airy disk angular radius, then the two points cannot be resolved in the image, but if their angular separation is much greater than this, distinct images of the two points are formed and they can therefore be resolved. Rayleigh defined the somewhat arbitrary "Rayleigh criterion" that two points whose angular separation is equal to the Airy disk radius (measured to first null, that is, to the first place where no light is seen) can be considered to be resolved. It can be seen that the greater the diameter of the lens or its aperture, the finer the resolution.[45] Interferometry, with its ability to mimic extremely large baseline apertures, allows for the greatest angular resolution possible.[38]
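The Rayleigh criterion, sin θ = 1.22 λ / D, gives a quick estimate of the finest detail an aperture can resolve. The sketch below uses assumed aperture diameters and a wavelength of 550 nm; the function names are illustrative.

```python
import math

def rayleigh_limit(wavelength, aperture_diameter):
    """Angular radius of the Airy disk in radians, from sin(theta) = 1.22 lambda / D."""
    return math.asin(1.22 * wavelength / aperture_diameter)

def to_arcseconds(radians):
    """Convert an angle from radians to arcseconds."""
    return math.degrees(radians) * 3600.0

wavelength = 550e-9  # assumed wavelength in metres

small = rayleigh_limit(wavelength, 0.10)  # assumed 10 cm aperture
large = rayleigh_limit(wavelength, 1.00)  # assumed 1 m aperture

print(to_arcseconds(small))
print(to_arcseconds(large))
```

Increasing the aperture diameter tenfold makes the resolvable angle about ten times smaller, in line with the statement that larger apertures give finer resolution.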

For astronomical imaging, the atmosphere prevents optimal resolution from being achieved in the visible spectrum due to the atmospheric scattering and dispersion which cause stars to twinkle. Astronomers refer to this effect as the quality of astronomical seeing. Techniques known as adaptive optics have been utilized to eliminate the atmospheric disruption of images and achieve results that approach the diffraction limit.[46]

Dispersion and scattering

Refractive processes take place in the physical optics limit, where the wavelength of light is similar to other distances, as a kind of scattering. The simplest type of scattering is Thomson scattering which occurs when electromagnetic waves are deflected by single particles. In the limit of Thomson scattering, in which the wavelike nature of light is evident, light is dispersed independent of the frequency, in contrast to Compton scattering which is frequency-dependent and strictly a quantum mechanical process, involving the nature of light as particles. In a statistical sense, elastic scattering of light by numerous particles much smaller than the wavelength of the light is a process known as Rayleigh scattering while the similar process for scattering by particles that are similar to or larger than the wavelength is known as Mie scattering with the Tyndall effect being a commonly observed result. A small proportion of light scattering from atoms or molecules may undergo Raman scattering, wherein the frequency changes due to excitation of the atoms and molecules. Brillouin scattering occurs when the frequency of light changes due to local changes with time and movements of a dense material.[47]

Dispersion occurs when different frequencies of light have different phase velocities, due either to material properties (material dispersion) or to the geometry of an optical waveguide (waveguide dispersion). The most familiar form of dispersion is a decrease in index of refraction with increasing wavelength, which is seen in most transparent materials. This is called "normal dispersion". It occurs in all dielectric materials, in wavelength ranges where the material does not absorb light.[48] In wavelength ranges where a medium has significant absorption, the index of refraction can increase with wavelength. This is called "anomalous dispersion".[31][48]

The separation of colors by a prism is an example of normal dispersion. At the surfaces of the prism, Snell's law predicts that light incident at an angle θ to the normal will be refracted at an angle arcsin(sin (θ) / n) . Thus, blue light, with its higher refractive index, is bent more strongly than red light, resulting in the well-known rainbow pattern.[31]
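The expression arcsin(sin(θ) / n) can be evaluated for two colors to see the difference in bending at a single surface. The refractive indices below (1.51 for red, 1.53 for blue) are assumed, illustrative values for a glass with normal dispersion.

```python
import math

def refracted_angle_deg(theta_deg, n):
    """Refraction angle in degrees for light entering a medium of index n from air."""
    return math.degrees(math.asin(math.sin(math.radians(theta_deg)) / n))

N_RED = 1.51   # assumed index for red light
N_BLUE = 1.53  # assumed index for blue light

theta = 45.0   # angle of incidence in degrees
red = refracted_angle_deg(theta, N_RED)
blue = refracted_angle_deg(theta, N_BLUE)

# Deviation from the original direction at this surface.
bend_red = theta - red
bend_blue = theta - blue
print(red, blue)
print(bend_red, bend_blue)
```

The blue ray ends up closer to the normal than the red ray, so it is deviated more from its original direction.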

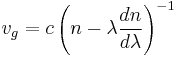

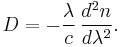

Material dispersion is often characterized by the Abbe number, which gives a simple measure of dispersion based on the index of refraction at three specific wavelengths. Waveguide dispersion is dependent on the propagation constant.[33] Both kinds of dispersion cause changes in the group characteristics of the wave, the features of the wave packet that change with the same frequency as the amplitude of the electromagnetic wave. "Group velocity dispersion" manifests as a spreading-out of the signal "envelope" of the radiation and can be quantified with a group dispersion delay parameter:

$$D = -\frac{1}{v_g^2}\,\frac{dv_g}{d\lambda}$$

where $v_g$ is the group velocity.[49] For a uniform medium, the group velocity is

$$v_g = c\left(n - \lambda\frac{dn}{d\lambda}\right)^{-1}$$

where n is the index of refraction, λ is the wavelength in vacuum, and c is the speed of light in a vacuum.[50] This gives a simpler form for the dispersion delay parameter:

$$D = -\frac{\lambda}{c}\,\frac{d^2 n}{d\lambda^2}$$

If D is less than zero, the medium is said to have positive dispersion or normal dispersion. If D is greater than zero, the medium has negative dispersion. If a light pulse is propagated through a normally dispersive medium, the higher-frequency components slow down more than the lower-frequency components. The pulse therefore becomes positively chirped, or up-chirped, increasing in frequency with time. This causes the spectrum coming out of a prism to appear with red light the least refracted and blue/violet light the most refracted. Conversely, if a pulse travels through an anomalously (negatively) dispersive medium, high-frequency components travel faster than the lower ones, and the pulse becomes negatively chirped, or down-chirped, decreasing in frequency with time.[51]
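The sign convention can be checked numerically. The sketch below assumes the common form D = −(λ/c) d²n/dλ² for a uniform medium and estimates the second derivative by finite differences. The Sellmeier coefficients for fused silica are a commonly quoted fit and are an assumption here, not something taken from this article.

```python
# Sellmeier fit for fused silica, wavelength in micrometres.
# Assumption: commonly quoted coefficients, not from this article.
B = (0.6961663, 0.4079426, 0.8974794)
L = (0.0684043, 0.1162414, 9.896161)

C_UM_PER_S = 2.99792458e14  # speed of light in micrometres per second

def refractive_index(lam_um):
    """Index of refraction n at vacuum wavelength lam_um (micrometres)."""
    lam2 = lam_um ** 2
    n_squared = 1.0 + sum(b * lam2 / (lam2 - l ** 2) for b, l in zip(B, L))
    return n_squared ** 0.5

def dispersion_ps_per_nm_km(lam_um, h=1e-3):
    """D = -(lambda / c) * d2n/dlambda2, with a central-difference derivative."""
    d2n = (refractive_index(lam_um + h)
           - 2.0 * refractive_index(lam_um)
           + refractive_index(lam_um - h)) / h ** 2
    d_s_per_um2 = -(lam_um / C_UM_PER_S) * d2n
    return d_s_per_um2 * 1e18  # 1 s/um^2 = 1e18 ps/(nm km)

for lam in (0.8, 1.55):
    print(f"{lam} um: D = {dispersion_ps_per_nm_km(lam):.1f} ps/(nm km)")
```

With these assumed coefficients, D comes out negative at 0.8 µm (normal dispersion) and positive at 1.55 µm, so the same material can fall on either side of the sign convention depending on wavelength.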

The result of group velocity dispersion, whether negative or positive, is ultimately temporal spreading of the pulse. This makes dispersion management extremely important in optical communications systems based on optical fibers, since if dispersion is too high, a group of pulses representing information will each spread in time and merge together, making it impossible to extract the signal.[49]

Polarization

Polarization is a general property of waves that describes the orientation of their oscillations. For transverse waves such as many electromagnetic waves, it describes the orientation of the oscillations in the plane perpendicular to the wave's direction of travel. The oscillations may be oriented in a single direction (linear polarization), or the oscillation direction may rotate as the wave travels (circular or elliptical polarization). Circularly polarized waves can rotate rightward or leftward in the direction of travel, and which of those two rotations is present in a wave is called the wave's chirality.[52]

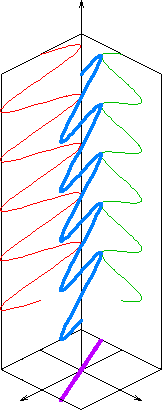

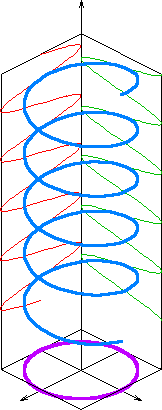

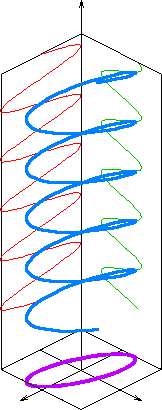

The typical way to consider polarization is to keep track of the orientation of the electric field vector as the electromagnetic wave propagates. The electric field vector of a plane wave may be arbitrarily divided into two perpendicular components labeled x and y (with z indicating the direction of travel). The shape traced out in the x-y plane by the electric field vector is a Lissajous figure that describes the polarization state.[33] The figures above show some examples of the evolution of the electric field vector (blue), with time (the vertical axes), at a particular point in space, along with its x and y components (red/left and green/right), and the path traced by the vector in the plane (purple). The same evolution would occur when looking at the electric field at a particular time while evolving the point in space, along the direction opposite to propagation.

In the leftmost figure above, the x and y components of the light wave are in phase. In this case, the ratio of their strengths is constant, so the direction of the electric vector (the vector sum of these two components) is constant. Since the tip of the vector traces out a single line in the plane, this special case is called linear polarization. The direction of this line depends on the relative amplitudes of the two components.[52]

In the middle figure, the two orthogonal components have the same amplitudes and are 90° out of phase. In this case, one component is zero when the other component is at maximum or minimum amplitude. There are two possible phase relationships that satisfy this requirement: the x component can be 90° ahead of the y component or it can be 90° behind the y component. In this special case, the electric vector traces out a circle in the plane, so this polarization is called circular polarization. The rotation direction in the circle depends on which of the two phase relationships exists and corresponds to right-hand circular polarization and left-hand circular polarization.[33]

In all other cases, where the phase difference is not a multiple of 180° and either the two components do not have the same amplitudes or they are not exactly 90° out of phase, the polarization is called elliptical polarization because the electric vector traces out an ellipse in the plane (the polarization ellipse). This is shown in the above figure on the right. Detailed mathematics of polarization is done using Jones calculus and is characterized by the Stokes parameters.[33]
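The three cases described above can be summarized as a small decision procedure. This is a minimal sketch, not a substitute for Jones calculus: the function name, the tolerance, and the choice of describing the wave by two amplitudes and a relative phase in degrees are all assumptions made for illustration.

```python
def classify_polarization(amp_x, amp_y, phase_deg, tol=1e-9):
    """Classify a plane wave from its x and y amplitudes and the phase of y relative to x."""
    if amp_x < tol or amp_y < tol:
        return "linear"  # only one component is present
    # Wrap the phase difference into the interval [-180, 180).
    phase = (phase_deg + 180.0) % 360.0 - 180.0
    if abs(phase) < tol or abs(abs(phase) - 180.0) < tol:
        return "linear"  # components in phase or exactly opposed
    if abs(abs(phase) - 90.0) < tol and abs(amp_x - amp_y) < tol:
        return "circular"  # equal amplitudes, a quarter cycle apart
    return "elliptical"

print(classify_polarization(1.0, 1.0, 0.0))
print(classify_polarization(1.0, 1.0, 90.0))
print(classify_polarization(1.0, 2.0, 90.0))
```

The sign of the 90° phase difference distinguishes the two circular handednesses; the sketch deliberately does not label them, since the naming convention differs between fields.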

Media that have different indexes of refraction for different polarization modes are called birefringent.[52] Well known manifestations of this effect appear in optical wave plates/retarders (linear modes) and in Faraday rotation/optical rotation (circular modes).[33] If the path length in the birefringent medium is sufficient, plane waves will exit the material with a significantly different propagation direction, due to refraction. For example, this is the case with macroscopic crystals of calcite, which present the viewer with two offset, orthogonally polarized images of whatever is viewed through them. It was this effect that provided the first discovery of polarization, by Erasmus Bartholinus in 1669. In addition, the phase shift, and thus the change in polarization state, is usually frequency dependent, which, in combination with dichroism, often gives rise to bright colors and rainbow-like effects. In mineralogy, such properties, known as pleochroism, are frequently exploited for the purpose of identifying minerals using polarization microscopes. Additionally, many plastics that are not normally birefringent will become so when subject to mechanical stress, a phenomenon which is the basis of photoelasticity.[52]

In this picture, θ1 - θ0 = θi.

Media that reduce the amplitude of certain polarization modes are called dichroic, with devices that block nearly all of the radiation in one mode known as polarizing filters or simply "polarizers". Malus' law, which is named after Etienne-Louis Malus, says that when a perfect polarizer is placed in a linearly polarized beam of light, the intensity, I, of the light that passes through is given by

$$I = I_0 \cos^2\theta_i$$

where

- I0 is the initial intensity,

- and θi is the angle between the light's initial polarization direction and the axis of the polarizer.[52]

A beam of unpolarized light can be thought of as containing a uniform mixture of linear polarizations at all possible angles. Since the average value of $\cos^2\theta$ is 1/2, the transmission coefficient becomes

$$\frac{I}{I_0} = \frac{1}{2}$$

In practice, some light is lost in the polarizer and the actual transmission of unpolarized light will be somewhat lower than this, around 38% for Polaroid-type polarizers but considerably higher (>49.9%) for some birefringent prism types.[33]
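A short numerical sketch of Malus' law, I = I0 cos²(θi), for an ideal polarizer, together with a check that averaging cos² over uniformly distributed angles gives 1/2. The function names and the sample count are illustrative assumptions, and real polarizers lose additional light as noted above.

```python
import math

def malus_intensity(i0, theta_deg):
    """Transmitted intensity through an ideal polarizer (Malus' law)."""
    return i0 * math.cos(math.radians(theta_deg)) ** 2

def mean_cos_squared(samples=3600):
    """Average of cos^2 over angles spaced uniformly around a full turn."""
    total = sum(math.cos(2.0 * math.pi * k / samples) ** 2 for k in range(samples))
    return total / samples

for angle in (0, 30, 60, 90):
    print(angle, round(malus_intensity(1.0, angle), 4))
print("mean of cos^2:", round(mean_cos_squared(), 6))
```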

In addition to birefringence and dichroism in extended media, polarization effects can also occur at the (reflective) interface between two materials of different refractive index. These effects are treated by the Fresnel equations. Part of the wave is transmitted and part is reflected, with the ratio depending on angle of incidence and the angle of refraction. In this way, physical optics recovers Brewster's angle.[33]
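To make the link to Brewster's angle concrete, the sketch below uses the standard Fresnel amplitude reflection coefficient for light polarized in the plane of incidence and the standard relation tan(θB) = n2/n1. Neither formula is written out in this article, so both are stated here as assumptions, and the indices (air to glass) are illustrative.

```python
import math

def brewster_angle_deg(n1, n2):
    """Angle of incidence at which p-polarized reflection vanishes."""
    return math.degrees(math.atan2(n2, n1))

def fresnel_rp(n1, n2, incidence_deg):
    """Amplitude reflection coefficient for p-polarized light (no total internal reflection)."""
    theta_i = math.radians(incidence_deg)
    theta_t = math.asin(n1 * math.sin(theta_i) / n2)  # Snell's law
    num = n2 * math.cos(theta_i) - n1 * math.cos(theta_t)
    den = n2 * math.cos(theta_i) + n1 * math.cos(theta_t)
    return num / den

theta_b = brewster_angle_deg(1.0, 1.5)
print(f"Brewster angle: {theta_b:.2f} deg, r_p there: {fresnel_rp(1.0, 1.5, theta_b):.2e}")
```

At this angle the reflected beam contains only the polarization perpendicular to the plane of incidence, which is why reflection from a transparent surface can polarize light.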

Most sources of electromagnetic radiation contain a large number of atoms or molecules that emit light. The orientation of the electric fields produced by these emitters may not be correlated, in which case the light is said to be unpolarized. If there is partial correlation between the emitters, the light is partially polarized. If the polarization is consistent across the spectrum of the source, partially polarized light can be described as a superposition of a completely unpolarized component, and a completely polarized one. One may then describe the light in terms of the degree of polarization, and the parameters of the polarization ellipse.[33]

Light reflected by shiny transparent materials is partly or fully polarized, except when the light is normal (perpendicular) to the surface. It was this effect that allowed the mathematician Etienne-Louis Malus to make the measurements on which he based the first mathematical models for polarized light. Polarization also occurs when light is scattered in the atmosphere. The scattered light produces the brightness and color in clear skies. This partial polarization of scattered light can be taken advantage of using polarizing filters to darken the sky in photographs. Optical polarization is principally of importance in chemistry due to circular dichroism and optical rotation ("circular birefringence") exhibited by optically active (chiral) molecules.[33]

Modern optics

Modern optics encompasses the areas of optical science and engineering that became popular in the 20th century. These areas of optical science typically relate to the electromagnetic or quantum properties of light but do include other topics. A major subfield of modern optics, quantum optics, deals with specifically quantum mechanical properties of light. Quantum optics is not just theoretical; some modern devices, such as lasers, have principles of operation that depend on quantum mechanics. Light detectors, such as photomultipliers and channeltrons, respond to individual photons. Electronic image sensors, such as CCDs, exhibit shot noise corresponding to the statistics of individual photon events. Light-emitting diodes and photovoltaic cells, too, cannot be understood without quantum mechanics. In the study of these devices, quantum optics often overlaps with quantum electronics.[53]

Specialty areas of optics research include the study of how light interacts with specific materials as in crystal optics and metamaterials. Other research focuses on the phenomenology of electromagnetic waves as in singular optics, non-imaging optics, non-linear optics, statistical optics, and radiometry. Additionally, computer engineers have taken an interest in integrated optics, machine vision, and photonic computing as possible components of the "next generation" of computers.[54]

Today, the pure science of optics is called optical science or optical physics to distinguish it from applied optical sciences, which are referred to as optical engineering. Prominent subfields of optical engineering include illumination engineering, photonics, and optoelectronics with practical applications like lens design, fabrication and testing of optical components, and image processing. Some of these fields overlap, with nebulous boundaries between the subjects' terms, which mean slightly different things in different parts of the world and in different areas of industry.[55] A professional community of researchers in nonlinear optics has developed in the last several decades due to advances in laser technology.[56]

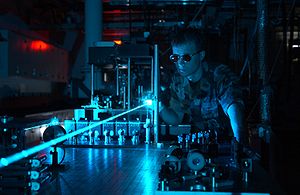

Lasers

A laser is a device that emits light (electromagnetic radiation) through a process called stimulated emission. The term laser is an acronym for Light Amplification by Stimulated Emission of Radiation.[57] Laser light is usually spatially coherent, which means that the light either is emitted in a narrow, low-divergence beam, or can be converted into one with the help of optical components such as lenses. Because the microwave equivalent of the laser, the maser, was developed first, devices that emit microwave and radio frequencies are usually called masers.[58]

The first working laser was demonstrated on 16 May 1960 by Theodore Maiman at Hughes Research Laboratories.[59] When first invented, they were called "a solution looking for a problem".[60] Since then, lasers have become a multi-billion dollar industry, finding utility in thousands of highly varied applications. The first application of lasers visible in the daily lives of the general population was the supermarket barcode scanner, introduced in 1974.[61] The laserdisc player, introduced in 1978, was the first successful consumer product to include a laser, but the compact disc player was the first laser-equipped device to become truly common in consumers' homes, beginning in 1982.[62] These optical storage devices use a semiconductor laser less than a millimeter wide to scan the surface of the disc for data retrieval. Fiber-optic communication relies on lasers to transmit large amounts of information at the speed of light. Other common applications of lasers include laser printers and laser pointers. Lasers are used in medicine in areas such as bloodless surgery, laser eye surgery, and laser capture microdissection and in military applications such as missile defense systems, electro-optical countermeasures (EOCM), and LIDAR. Lasers are also used in holograms, bubblegrams, laser light shows, and laser hair removal.[63]

Applications

Optics is part of everyday life. The ubiquity of visual systems in biology indicates the central role optics plays as the science of one of the five senses. Many people benefit from eyeglasses or contact lenses, and optics are integral to the functioning of many consumer goods including cameras. Rainbows and mirages are examples of optical phenomena. Optical communication provides the backbone for both the Internet and modern telephony.

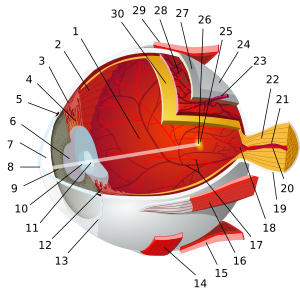

Human eye

The human eye functions by focusing light onto an array of photoreceptor cells called the retina, which covers the back of the eye. The focusing is accomplished by a series of transparent media. Light entering the eye passes first through the cornea, which provides much of the eye's optical power. The light then continues through the fluid just behind the cornea—the anterior chamber, then passes through the pupil. The light then passes through the lens, which focuses the light further and allows adjustment of focus. The light then passes through the main body of fluid in the eye—the vitreous humor, and reaches the retina. The cells in the retina cover the back of the eye, except for where the optic nerve exits; this results in a blind spot.

There are two types of photoreceptor cells, rods and cones, which are sensitive to different aspects of light.[64] Rod cells are sensitive to the intensity of light over a wide frequency range, thus are responsible for black-and-white vision. Rod cells are not present on the fovea, the area of the retina responsible for central vision, and are not as responsive as cone cells to spatial and temporal changes in light. There are, however, twenty times more rod cells than cone cells in the retina because the rod cells are present across a wider area. Because of their wider distribution, rods are responsible for peripheral vision.[65]

In contrast, cone cells are less sensitive to the overall intensity of light, but come in three varieties that are sensitive to different frequency-ranges and thus are used in the perception of color and photopic vision. Cone cells are highly concentrated in the fovea and have a high visual acuity meaning that they are better at spatial resolution than rod cells. Since cone cells are not as sensitive to dim light as rod cells, most night vision is limited to rod cells. Likewise, since cone cells are in the fovea, central vision (including the vision needed to do most reading, fine detail work such as sewing, or careful examination of objects) is done by cone cells.[65]

Ciliary muscles around the lens allow the eye's focus to be adjusted. This process is known as accommodation. The near point and far point define the nearest and farthest distances from the eye at which an object can be brought into sharp focus. For a person with normal vision, the far point is located at infinity. The near point's location depends on how much the muscles can increase the curvature of the lens, and how inflexible the lens has become with age. Optometrists, ophthalmologists, and opticians usually consider an appropriate near point to be closer than normal reading distance—approximately 25 cm.[64]

Defects in vision can be explained using optical principles. As people age, the lens becomes less flexible and the near point recedes from the eye, a condition known as presbyopia. Similarly, people suffering from hyperopia cannot decrease the focal length of their lens enough to allow for nearby objects to be imaged on their retina. Conversely, people who cannot increase the focal length of their lens enough to allow for distant objects to be imaged on the retina suffer from myopia and have a far point that is considerably closer than infinity. A condition known as astigmatism results when the cornea is not spherical but instead is more curved in one direction. This causes horizontally extended objects to be focused on different parts of the retina than vertically extended objects, and results in distorted images.[64]

All of these conditions can be corrected using corrective lenses. For presbyopia and hyperopia, a converging lens provides the extra curvature necessary to bring the near point closer to the eye while for myopia a diverging lens provides the curvature necessary to send the far point to infinity. Astigmatism is corrected with a cylindrical surface lens that curves more strongly in one direction than in another, compensating for the non-uniformity of the cornea.[66]

The optical power of corrective lenses is measured in diopters, a value equal to the reciprocal of the focal length measured in meters, with a positive focal length corresponding to a converging lens and a negative focal length corresponding to a diverging lens. For lenses that correct for astigmatism as well, three numbers are given: one for the spherical power, one for the cylindrical power, and one for the angle of orientation of the astigmatism.[66]
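The diopter definition translates directly into code. In this sketch the function names are hypothetical, and the myopia helper assumes a thin lens placed at the eye, so that a diverging lens whose focal length equals the far-point distance moves the far point to infinity.

```python
def optical_power_diopters(focal_length_m):
    """Optical power in diopters: reciprocal of the focal length in meters."""
    if focal_length_m == 0:
        raise ValueError("focal length must be non-zero")
    return 1.0 / focal_length_m

def myopia_correction_diopters(far_point_m):
    """Power of a diverging lens that sends a far point at far_point_m to infinity.

    Assumption: thin lens at the eye, so the lens-to-eye distance is neglected.
    """
    return optical_power_diopters(-far_point_m)

print(optical_power_diopters(0.5))        # converging lens, f = +0.5 m
print(myopia_correction_diopters(0.25))   # far point at 25 cm
```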

Visual effects

Optical illusions (also called visual illusions) are characterized by visually perceived images that differ from objective reality. The information gathered by the eye is processed in the brain to give a percept that differs from the object being imaged. Optical illusions can be the result of a variety of phenomena including physical effects that create images that are different from the objects that make them, the physiological effects on the eyes and brain of excessive stimulation (e.g. brightness, tilt, color, movement), and cognitive illusions where the eye and brain make unconscious inferences.[67]

Cognitive illusions include some which result from the unconscious misapplication of certain optical principles. For example, the Ames room, Hering, Müller-Lyer, Orbison, Ponzo, Sander, and Wundt illusions all rely on the suggestion of the appearance of distance by using converging and diverging lines, in the same way that parallel light rays (or indeed any set of parallel lines) appear to converge at a vanishing point at infinity in two-dimensionally rendered images with artistic perspective.[68] This suggestion is also responsible for the famous moon illusion where the moon, despite having essentially the same angular size, appears much larger near the horizon than it does at zenith.[69] This illusion so confounded Ptolemy that he incorrectly attributed it to atmospheric refraction when he described it in his treatise, Optics.[6]

Another type of optical illusion exploits broken patterns to trick the mind into perceiving symmetries or asymmetries that are not present. Examples include the café wall, Ehrenstein, Fraser spiral, Poggendorff, and Zöllner illusions. Related, but not strictly illusions, are patterns that occur due to the superimposition of periodic structures. For example, transparent tissues with a grid structure produce shapes known as moiré patterns, while the superimposition of periodic transparent patterns comprising parallel opaque lines or curves produces line moiré patterns.[70]

Optical instruments

Single lenses have a variety of applications including photographic lenses, corrective lenses, and magnifying glasses while single mirrors are used in parabolic reflectors and rear-view mirrors. Combining a number of mirrors, prisms, and lenses produces compound optical instruments which have practical uses. For example, a periscope is simply two plane mirrors aligned to allow for viewing around obstructions. The most famous compound optical instruments in science are the microscope and the telescope which were both invented by the Dutch in the late 16th century.[71]

Microscopes were first developed with just two lenses: an objective lens and an eyepiece. The objective lens is essentially a magnifying glass and was designed with a very small focal length, while the eyepiece generally has a longer focal length. This has the effect of producing magnified images of close objects. Generally, an additional source of illumination is used since magnified images are dimmer due to the conservation of energy and the spreading of light rays over a larger surface area. Modern microscopes, known as compound microscopes, have many lenses in them (typically four) to optimize the functionality and enhance image stability.[71] A slightly different variety of microscope, the comparison microscope, looks at side-by-side images to produce a stereoscopic binocular view that appears three dimensional when used by humans.[72]

The first telescopes, called refracting telescopes, were also developed with a single objective and eyepiece lens. In contrast to the microscope, the objective lens of the telescope was designed with a large focal length to avoid optical aberrations. The objective focuses an image of a distant object at its focal point, which is adjusted to be at the focal point of an eyepiece of a much smaller focal length. The main goal of a telescope is not necessarily magnification, but rather collection of light, which is determined by the physical size of the objective lens. Thus, telescopes are normally indicated by the diameters of their objectives rather than by the magnification, which can be changed by switching eyepieces. Because the magnification of a telescope is equal to the focal length of the objective divided by the focal length of the eyepiece, smaller focal-length eyepieces cause greater magnification.[71]

Since crafting large lenses is much more difficult than crafting large mirrors, most modern telescopes are reflecting telescopes, that is, telescopes that use a primary mirror rather than an objective lens. The same general optical considerations apply to reflecting telescopes that applied to refracting telescopes, namely, the larger the primary mirror, the more light collected, and the magnification is still equal to the focal length of the primary mirror divided by the focal length of the eyepiece. Professional telescopes generally do not have eyepieces and instead place an instrument (often a charge-coupled device) at the focal point.[71]
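The magnification rule applies equally to refractors and reflectors and can be sketched in a few lines. The numerical values are illustrative, and the light-gathering comparison assumes that collected light scales with the area of the aperture, that is, with the square of its diameter.

```python
def magnification(objective_focal_mm, eyepiece_focal_mm):
    """Telescope magnification: objective (or primary mirror) focal length over eyepiece focal length."""
    return objective_focal_mm / eyepiece_focal_mm

def light_gathering_ratio(diameter_a_mm, diameter_b_mm):
    """How much more light aperture A collects than aperture B (assumes area scaling)."""
    return (diameter_a_mm / diameter_b_mm) ** 2

print(magnification(1000, 25))          # longer eyepiece, lower magnification
print(magnification(1000, 10))          # shorter eyepiece, higher magnification
print(light_gathering_ratio(200, 100))  # doubling the diameter
```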

Photography

The optics of photography involves both lenses and the medium in which the electromagnetic radiation is recorded, whether it be a plate, film, or charge-coupled device. Photographers must consider the reciprocity of the camera and the shot which is summarized by the relation

- Exposure ∝ ApertureArea × ExposureTime × SceneLuminance[73]

In other words, the smaller the aperture (giving greater depth of focus), the less light coming in, so the length of time has to be increased (leading to possible blurriness if motion occurs). An example of the use of the law of reciprocity is the Sunny 16 rule, which gives a rough estimate of the settings needed for proper exposure in daylight.[74]

A camera's aperture is measured by a unitless number called the f-number or f-stop, f/#, often notated as N, and given by

$$f/\# = N = \frac{f}{D}$$

where f is the focal length and D is the diameter of the entrance pupil. By convention, "f/#" is treated as a single symbol, and specific values of f/# are written by replacing the number sign with the value. The two ways to increase the f-stop are to either decrease the diameter of the entrance pupil or change to a longer focal length (in the case of a zoom lens, this can be done by simply adjusting the lens). Higher f-numbers also have a larger depth of field due to the lens approaching the limit of a pinhole camera which is able to focus all images perfectly, regardless of distance, but requires very long exposure times.[75]
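The f-number and the reciprocity relation can be combined in a small sketch. It assumes the usual statement of the Sunny 16 rule (at f/16 in bright sun, the exposure time in seconds is roughly 1/ISO) and uses the fact that aperture area scales as 1/N², so the time needed for the same exposure scales as N². The function names are hypothetical.

```python
def f_number(focal_length_mm, pupil_diameter_mm):
    """f-number: focal length divided by entrance pupil diameter."""
    return focal_length_mm / pupil_diameter_mm

def sunny16_shutter_s(iso, n):
    """Rough daylight exposure time at f-number n, scaled from the f/16 baseline.

    Assumption: Sunny 16 baseline of 1/ISO seconds at f/16.
    """
    baseline = 1.0 / iso
    return baseline * (n / 16.0) ** 2

print(f_number(50, 25))            # a 50 mm lens with a 25 mm entrance pupil
print(sunny16_shutter_s(100, 16))  # baseline
print(sunny16_shutter_s(100, 8))   # two stops wider, one quarter of the time
```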

When selecting the lens to use, photographers generally consider the field of view that the lens will provide given the specifications of their camera. For a given film or sensor size, specified by the length of the diagonal across the image, a lens may be classified as

- Normal lens: angle of view of the diagonal about 50°, produced by a focal length approximately equal to the image diagonal.[76]

- Macro lens: angle of view narrower than 25° and focal length longer than normal. These lenses are used for close-ups, e.g., for images of the same size as the object. They usually feature a flat field as well, which means that the subject plane is exactly parallel with the film plane.[77]

- Wide-angle lens: angle of view wider than 60° and focal length shorter than normal.[78]

- Telephoto lens or long-focus lens: angle of view narrower and focal length longer than normal. A distinction is sometimes made between a long-focus lens and a true telephoto lens: the telephoto lens uses a telephoto group to be physically shorter than its focal length.[79]
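The classification above can be related to focal length with the usual angle-of-view formula, 2·arctan(d / 2f), for a rectilinear lens focused at infinity. That formula and the 36 mm × 24 mm frame used below are assumptions for illustration and are not taken from the cited sources.

```python
import math

def diagonal_angle_of_view_deg(focal_length_mm, diagonal_mm):
    """Diagonal angle of view for a rectilinear lens focused at infinity."""
    return math.degrees(2.0 * math.atan(diagonal_mm / (2.0 * focal_length_mm)))

# Assumed frame: 36 mm x 24 mm, so the diagonal is about 43.3 mm.
DIAGONAL_MM = math.hypot(36.0, 24.0)

for f in (24, 43.3, 50, 200):
    print(f"{f} mm: {diagonal_angle_of_view_deg(f, DIAGONAL_MM):.1f} deg")
```

A focal length equal to the diagonal gives about 53°, consistent with the "about 50°" quoted for a normal lens, while shorter and longer focal lengths fall into the wide-angle and long-focus ranges.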

The absolute value for the exposure time required depends on how sensitive to light the medium being used is (measured by the film speed, or, for digital media, by the quantum efficiency).[80] Early photography used media that had very low light sensitivity, and so exposure times had to be long even for very bright shots. As technology has improved, so has the sensitivity through film cameras and digital cameras.[81]

Other results from physical and geometrical optics apply to camera optics. For example, the maximum resolution capability of a particular camera set-up is determined by the diffraction limit associated with the pupil size and given, roughly, by the Rayleigh criterion.[82]
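As a rough estimate of the diffraction limit, the sketch below uses the common circular-aperture form of the Rayleigh criterion, θ ≈ 1.22 λ / D, and the corresponding spot radius in the focal plane, about 1.22 λ N for f-number N. Both expressions are standard approximations assumed here rather than quoted from this article.

```python
def rayleigh_limit_rad(wavelength_m, aperture_diameter_m):
    """Smallest resolvable angular separation for a circular aperture (radians)."""
    return 1.22 * wavelength_m / aperture_diameter_m

def diffraction_spot_radius_um(wavelength_um, f_number):
    """Approximate radius of the diffraction spot in the focal plane (micrometres)."""
    return 1.22 * wavelength_um * f_number

print(rayleigh_limit_rad(550e-9, 0.025))     # green light, 25 mm pupil
print(diffraction_spot_radius_um(0.55, 8))   # green light at f/8
```

Stopping a lens down increases the f-number and therefore the diffraction spot, which is why very small apertures eventually reduce sharpness even as depth of field grows.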

Atmospheric optics

The unique optical properties of the atmosphere cause a wide range of spectacular optical phenomena. The blue color of the sky is a direct result of Rayleigh scattering, which redirects higher frequency (blue) sunlight back into the field of view of the observer. Because blue light is scattered more easily than red light, the sun takes on a reddish hue when it is observed through a thick atmosphere, as during a sunrise or sunset. Additional particulate matter in the sky can scatter different colors at different angles, creating colorful glowing skies at dusk and dawn. Scattering off ice crystals and other particles in the atmosphere is responsible for halos, afterglows, coronas, rays of sunlight, and sun dogs. The variation in these kinds of phenomena is due to different particle sizes and geometries.[83]

Mirages are another sort of optical phenomenon, due to variations in the refraction of light through the atmosphere. Other dramatic optical phenomena associated with this include the Novaya Zemlya effect, where the sun appears to rise earlier than predicted with a distorted shape. A spectacular form of refraction, called the Fata Morgana, occurs with a temperature inversion, where objects on the horizon or even beyond the horizon, such as islands, cliffs, ships or icebergs, appear elongated and elevated, like "fairy tale castles".[84]

Rainbows are the result of a combination of optical effects: total internal reflection and dispersion of light in raindrops. A single reflection off the backs of an array of raindrops produces a coherent rainbow with an angular size on the sky that ranges from 40° to 42° with red on the outside. Double rainbows are produced by two internal reflections with angular size of 50.5° to 54° with violet on the outside. Because rainbows must be seen with the sun 180° away from the center of the rainbow, rainbows are more prominent the closer the sun is to the horizon.[52]
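The quoted rainbow angles can be reproduced approximately with the classical geometric (Descartes) ray treatment of a spherical drop, in which the bow appears at the angle of minimum deviation. This derivation is not given in the article, and the refractive indices of water for red and violet light below are assumed approximate values.

```python
import math

def rainbow_angle_deg(n, reflections=1):
    """Angle of the bow from the antisolar point for a spherical drop of index n.

    Uses the minimum-deviation condition cos^2(i) = (n^2 - 1) / (k (k + 2))
    for k internal reflections (k = 1 primary, k = 2 secondary).
    """
    k = reflections
    i = math.acos(math.sqrt((n * n - 1.0) / (k * (k + 2))))
    r = math.asin(math.sin(i) / n)  # Snell's law at the drop surface
    deviation = k * math.pi + 2.0 * i - 2.0 * (k + 1) * r
    return abs(math.degrees(math.pi - deviation))

N_RED, N_VIOLET = 1.331, 1.343  # assumed approximate indices of water

for k in (1, 2):
    print(k, round(rainbow_angle_deg(N_RED, k), 1), round(rainbow_angle_deg(N_VIOLET, k), 1))
```

In the primary bow the red angle is the larger one (red on the outside), while in the secondary bow the order reverses, in line with the description above.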

See also

- Important publications in optics

- List of optical topics

References

- ↑ 1.0 1.1 McGraw-Hill Encyclopedia of Science and Technology (5th ed.). McGraw-Hill. 1993.

- ↑ "World's oldest telescope?". BBC News. 1999-07-01. http://news.bbc.co.uk/1/hi/sci/tech/380186.stm. Retrieved 2010-01-03.

- ↑ T. F. Hoad (1996). The Concise Oxford Dictionary of English Etymology.

- ↑ T. L. Heath (2003). A manual of greek mathematics. Courier Dover Publications. pp. 181–182. ISBN 0486432319.

- ↑ Euclid (1999). Elaheh Kheirandish. ed. The Arabic version of Euclid's optics = Kitāb Uqlīdis fī ikhtilāf al-manāẓir. New York: Springer. ISBN 0387985239.

- ↑ 6.0 6.1 Ptolemy (1996). A. Mark Smith. ed. Ptolemy's theory of visual perception: an English translation of the Optics with introduction and commentary. DIANE Publishing. ISBN 0871698625.

- ↑ Rashed, Roshdi (Sept. 1990). "A pioneer in anaclastics: Ibn Sahl on burning mirrors and lenses". Isis 81 (3): 464–491. doi:10.1086/355456. ISSN 0021-1753. http://www.jstor.org/stable/233423.

- ↑ A. I. Sabra and J. P. Hogendijk (2003). The Enterprise of Science in Islam: New Perspectives. MIT Press. pp. 85–118. ISBN 0262194821. OCLC 50252039 237875424 50252039.

- ↑ G. Hatfield (1996). "Was the Scientific Revolution Really a Revolution in Science?". In F. J. Ragep, P. Sally, S. J. Livesey. Tradition, Transmission, Transformation: Proceedings of Two Conferences on Pre-modern Science held at the University of Oklahoma. Brill Publishers. p. 500. ISBN 9004091262. OCLC 234073624 234096934 19740432 234073624 234096934.

- ↑ G. Simon (2006). "The Gaze in Ibn al-Haytham". The Medieval History Journal 9: 89. doi:10.1177/097194580500900105.

- ↑ M. Bellis. "The History of Eye Glasses or Spectacles". About.com:Inventors. http://inventors.about.com/od/gstartinventions/a/glass_3.htm. Retrieved 2007-09-01.

- ↑ A. van Helden. "Galileo's Telescope". http://cnx.org/content/m11932/latest/.

- ↑ Giambattista della Porta (Natural Magick). NuVision Publications, LLC. 2005. p. 339.

- ↑ Watson, Fred (2007). Stargazer: the life and times of the telescope. Allen & Unwin. pp. 125–30. ISBN 9781741753837. http://books.google.com/?id=2LZZginzib4C&pg=PA125.

- ↑ Microscopes: Time Line, Nobel Foundation, retrieved April 3, 2009

- ↑ S. J. Gould (2000). "Ch. 2: The sharp-eyed lynx, outfoxed by Nature". The Lying Stones of Marrakech. London: Jonathon Cape. ISBN 0224050443.

- ↑ 17.0 17.1 A. I. Sabra (1981). Theories of light, from Descartes to Newton. CUP Archive. ISBN 0521284368.

- ↑ W. F. Magie (1935). A Source Book in Physics. Harvard University Press. p. 309.

- ↑ J. C. Maxwell (1865). "A Dynamical Theory of the Electromagnetic Field". Philosophical Transactions of the Royal Society of London 155: 459.

- ↑ For a solid approach to the complexity of Planck's intellectual motivations for the quantum, for his reluctant acceptance of its implications, see H. Kragh, Max Planck: the reluctant revolutionary, Physics World. December 2000.

- ↑ Einstein, A. (1967). "On a heuristic viewpoint concerning the production and transformation of light". In Ter Haar, D.. The Old Quantum Theory. Pergamon. pp. 91–107. http://wien.cs.jhu.edu/AnnusMirabilis/AeReserveArticles/eins_lq.pdf. Retrieved March 18, 2010. The chapter is an English translation of Einstein's 1905 paper on the photoelectric effect.

- ↑ Einstein, A. (1905). "Über einen die Erzeugung und Verwandlung des Lichtes betreffenden heuristischen Gesichtspunkt [On a heuristic viewpoint concerning the production and transformation of light]" (in German). Annalen der Physik 322 (6): 132–148. doi:10.1002/andp.19053220607.

- ↑ N. Bohr (1913). "On the Constitution of Atoms and Molecules". Philosophical Magazine 26 (Series 6): 1–25. The landmark paper laying out the Bohr model of the atom and molecular bonding.

- ↑ R. Feynman (1985). "Chapter 1". QED: The Strange Theory of Light and Matter. Princeton University Press. p. 6. http://www.amazon.com/gp/reader/0691024170.

- ↑ N. Taylor (2000). LASER: The inventor, the Nobel laureate, and the thirty-year patent war. New York: Simon & Schuster. ISBN 0684835150.

- ↑ Maxwell, James Clerk (1865). "A dynamical theory of the electromagnetic field" (pdf). Philosophical Transactions of the Royal Society of London 155: 499. http://upload.wikimedia.org/wikipedia/commons/1/19/A_Dynamical_Theory_of_the_Electromagnetic_Field.pdf. This article accompanied a December 8, 1864 presentation by Maxwell to the Royal Society. See also A dynamical theory of the electromagnetic field.

- ↑ T. Koupelis and K. F. Kuhn (2007). In Quest of the Universe. Jones & Bartlett Publishers. ISBN 0763743879. http://books.google.com/?id=WwKjznJ9Kq0C&pg=PA102.

- ↑ D. H. Delphenich (2006). "Nonlinear optical analogies in quantum electrodynamics". ArXiv preprint. http://arxiv.org/abs/hep-th/0610088v1.

- ↑ Arthur Schuster, An Introduction to the Theory of Optics, London: Edward Arnold, 1904.

- ↑ J. E. Greivenkamp (2004). Field Guide to Geometrical Optics. SPIE Field Guides vol. FG01. SPIE. pp. 19–20. ISBN 0819452947.

- ↑ 31.0 31.1 31.2 31.3 31.4 31.5 31.6 31.7 31.8 31.9 H. D. Young (1992). University Physics 8e. Addison-Wesley. ISBN 0201529815. Chapter 35.

- ↑ E. W. Marchand, Gradient Index Optics, New York, NY, Academic Press, 1978.

- ↑ 33.00 33.01 33.02 33.03 33.04 33.05 33.06 33.07 33.08 33.09 33.10 33.11 33.12 E. Hecht (1987). Optics (2nd ed.). Addison Wesley. ISBN 020111609X. Chapters 5 & 6.

- ↑ 34.0 34.1 34.2 R. S. Longhurst (1968). Geometrical and Physical Optics, 2nd Edition. London: Longmans.

- ↑ J. Goodman (2005). Introduction to Fourier Optics (3rd ed.). Roberts & Co Publishers. ISBN 0974707724. http://books.google.com/?id=ow5xs_Rtt9AC&printsec=frontcover.

- ↑ A. E. Siegman (1986). Lasers. University Science Books. ISBN 0935702113. Chapter 16.

- ↑ 37.0 37.1 37.2 37.3 H. D. Young (1992). University Physics 8e. Addison-Wesley. ISBN 0201529815. Chapter 37.

- ↑ 38.0 38.1 P. Hariharan (2003). Optical Interferometry (2nd ed.). San Diego, USA: Academic Press. http://www.astro.lsa.umich.edu/~monnier/Publications/ROP2003_final.pdf.

- ↑ E. R. Hoover (1977). Cradle of Greatness: National and World Achievements of Ohio's Western Reserve. Cleveland: Shaker Savings Association.

- ↑ J. L. Aubert (1760). Memoires pour l'histoire des sciences et des beaux arts. Paris: Impr. de S. A. S.; Chez E. Ganeau. p. 149. http://books.google.com/?id=3OgDAAAAMAAJ&pg=PP151.

- ↑ D. Brewster (1831). A Treatise on Optics. London: Longman, Rees, Orme, Brown & Green and John Taylor. p. 95. http://books.google.com/?id=opYAAAAAMAAJ&pg=RA1-PA95.

- ↑ R. Hooke (1665). Micrographia: or, Some physiological descriptions of minute bodies made by magnifying glasses. London: J. Martyn and J. Allestry.

- ↑ H. W. Turnbull (1940–1941). "Early Scottish Relations with the Royal Society: I. James Gregory, F.R.S. (1638-1675)". Notes and Records of the Royal Society of London 3: 22. http://www.jstor.org/stable/531136.

- ↑ T. Rothman (2003). Everything's Relative and Other Fables in Science and Technology. New Jersey: Wiley. ISBN 0471202576.

- ↑ 45.0 45.1 45.2 45.3 H. D. Young (1992). University Physics 8e. Addison-Wesley. ISBN 0201529815. Chapter 38.

- ↑ R. N. Tubbs. Lucky Exposures: Diffraction limited astronomical imaging through the atmosphere.

- ↑ C. F. Bohren and D. R. Huffman (1983). Absorption and Scattering of Light by Small Particles. Wiley. ISBN 0471293407.

- ↑ 48.0 48.1 J. D. Jackson (1975). Classical Electrodynamics (2nd ed.). Wiley. p. 286. ISBN 047143132X.

- ↑ 49.0 49.1 R. Ramaswami and K. N. Sivarajan (1998). Optical Networks: A Practical Perspective. London: Academic Press.

- ↑ Brillouin, Léon. Wave Propagation and Group Velocity. Academic Press Inc., New York (1960)

- ↑ M. Born and E. Wolf (1999). Principles of Optics. Cambridge: Cambridge University Press. pp. 14–24. ISBN 0521642221.

- ↑ 52.0 52.1 52.2 52.3 52.4 52.5 H. D. Young (1992). University Physics 8e. Addison-Wesley. ISBN 0201529815. Chapter 34.

- ↑ D. F. Walls and G. J. Milburn (1994). Quantum Optics. Springer.

- ↑ Alastair D. McAulay (1999). Optical Computer Architectures: The Application of Optical Concepts to Next Generation Computers.

- ↑ "SPIE society". http://spie.org/.

- ↑ Y. R. Shen (1984). The Principles of Nonlinear Optics. New York: Wiley-Interscience.

- ↑ "laser". Reference.com. http://dictionary.reference.com/browse/laser. Retrieved 2008-05-15.

- ↑ "Charles H. Townes - Nobel Lecture". http://nobelprize.org/physics/laureates/1964/townes-lecture.pdf.

- ↑ C. H. Townes. "The first laser". University of Chicago. http://www.press.uchicago.edu/Misc/Chicago/284158_townes.html. Retrieved 2008-05-15.

- ↑ C. H. Townes (2003). "The first laser". In Laura Garwin and Tim Lincoln. A Century of Nature: Twenty-One Discoveries that Changed Science and the World. University of Chicago Press. pp. 107–12. ISBN 0226284131. http://www.press.uchicago.edu/Misc/Chicago/284158_townes.html. Retrieved 2008-02-02.

- ↑ "Handheld barcode scanner technologies". http://www.denso-wave.com/en/adcd/fundamental/barcode/scanner.html.

- ↑ "How the CD was developed". BBC News. 2007-08-17. http://news.bbc.co.uk/2/hi/technology/6950933.stm. Retrieved 2007-08-17.

- ↑ J. Wilson and J.F.B. Hawkes (1987). Lasers: Principles and Applications, Prentice Hall International Series in Optoelectronics. Prentice Hall. ISBN 0135236975.

- ↑ 64.0 64.1 64.2 D. Atchison and G. Smith (2000). Optics of the Human Eye. Elsevier. ISBN 0750637757.

- ↑ 65.0 65.1 E. R. Kandel, J. H. Schwartz, T. M. Jessell (2000). Principles of Neural Science (4th ed.). New York: McGraw-Hill. pp. 507–513. ISBN 0838577016.

- ↑ 66.0 66.1 D. Meister. "Ophthalmic Lens Design". OptiCampus.com. http://www.opticampus.com/cecourse.php?url=lens_design/&OPTICAMP=f1e4252df70c63961503c46d0c8d8b60. Retrieved November 12, 2008.

- ↑ J. Bryner (2008-06-02). "Key to All Optical Illusions Discovered". LiveScience.com. http://www.livescience.com/strangenews/080602-foresee-future.html.

- ↑ Geometry of the Vanishing Point at Convergence

- ↑ "The Moon Illusion Explained", Don McCready, University of Wisconsin-Whitewater

- ↑ A. K. Jain, M. Figueiredo, J. Zerubia (2001). Energy Minimization Methods in Computer Vision and Pattern Recognition. Springer. ISBN 9783540425236. http://books.google.com/?id=yb8otde21fcC&pg=RA1-PA198.

- ↑ 71.0 71.1 71.2 71.3 H. D. Young (1992). University Physics 8e. Addison-Wesley. ISBN 0201529815. Chapter 36.

- ↑ P. E. Nothnagle, W. Chambers, M. W. Davidson. "Introduction to Stereomicroscopy". Nikon MicroscopyU. http://www.microscopyu.com/articles/stereomicroscopy/stereointro.html.

- ↑ Samuel Edward Sheppard and Charles Edward Kenneth Mees (1907). Investigations on the Theory of the Photographic Process. Longmans, Green and Co. p. 214. http://books.google.com/?id=luNIAAAAIAAJ&pg=PA214&dq=Abney+Schwarzschild+reciprocity+failure.

- ↑ B. J. Suess (2003). Mastering Black-and-White Photography. Allworth Communications. ISBN 1581153066. http://books.google.com/?id=7LaRPNINH_YC&pg=PT112.

- ↑ M. J. Langford (2000). Basic Photography. Focal Press. ISBN 0240515927.

- ↑ Leslie D. Stroebel (1999). View Camera Technique. Focal Press. ISBN 0240803450. http://books.google.com/?id=71zxDuunAvMC&pg=PA136.

- ↑ "Guide to macro photography". Photo.net. http://photo.net/learn/macro/.

- ↑ S. Simmons (1992). Using the View Camera. Amphoto Books. p. 35. ISBN 0817463534.

- ↑ New York Times Staff (2004). The New York Times Guide to Essential Knowledge. Macmillan. ISBN 9780312313678. http://books.google.com/?id=zqkdNwRxSooC&pg=PA109.

- ↑ R. R. Carlton, A. McKenna Adler (2000). Principles of Radiographic Imaging: An Art and a Science. Thomson Delmar Learning. ISBN 0766813002. http://books.google.com/?id=oA-eBHsapX8C&pg=PA318.

- ↑ W. Crawford (1979). The Keepers of Light: A History and Working Guide to Early Photographic Processes. Dobbs Ferry, New York: Morgan & Morgan. p. 20. ISBN 0871001586.

- ↑ J. M. Cowley (1975). Diffraction physics. Amsterdam: North-Holland. ISBN 0444107916.

- ↑ C. D. Ahrens (1994). Meteorology Today: an introduction to weather, climate, and the environment (5th ed.). West Publishing Company. pp. 88–89.

- ↑ A. Young. "An Introduction to Mirages". http://mintaka.sdsu.edu/GF/mirages/mirintro.html.

- Further reading

- M. Born and E. Wolf (1999). Principles of Optics (7th ed.). Pergamon Press.

- E. Hecht (2001). Optics (4th ed.). Pearson Education. ISBN 0805385665.

- R. A. Serway and J. W. Jewett (2004). Physics for Scientists and Engineers (6th ed.). Brooks/Cole. ISBN 0534408427.

- P. Tipler (2004). Physics for Scientists and Engineers: Electricity, Magnetism, Light, and Elementary Modern Physics (5th ed.). W. H. Freeman. ISBN 0716708108.

- S. G. Lipson (1995). Optical Physics (3rd ed.). Cambridge University Press. ISBN 0521436311.

- Fowles, Grant R. (1989). Introduction to Modern Optics. Dover Publications. ISBN 0-486-65957-7.

External links

- Textbooks and tutorials

- Optics – an open-source optics textbook

- Optics2001 – Optics library and community

- Fundamental Optics - CVI Melles Griot Technical Guide

- Wikibooks modules

- Societies
